🌀 The Year Coding Changed Forever

When Collins Dictionary named “vibe coding” its Word of the Year for 2025, it marked more than a trend: it confirmed a cultural shift in how software gets made.

Once a niche practice in developer Discords and AI labs, vibe coding has become a mainstream creative movement.

It’s the moment when programming stopped being about syntax and started being about conversation.

Instead of learning to code, millions are now learning to collaborate with intelligence.

⚙️ What Is Vibe Coding?

At its core, vibe coding means using AI to build apps through natural language or voice prompts.

You describe what you want — an app, website, workflow, or game — and the AI builds it.

You review the results, suggest changes, and iterate until it feels right.

As Andrej Karpathy, who coined the term in February 2025, described it:

“There’s a new kind of coding I call ‘vibe coding’, where you fully give in to the vibes, embrace exponentials, and forget that the code even exists.”

That surrender is what makes it powerful — and polarizing.

Champions say it democratizes creation.

Critics say it produces code you can’t understand.

Both are right.

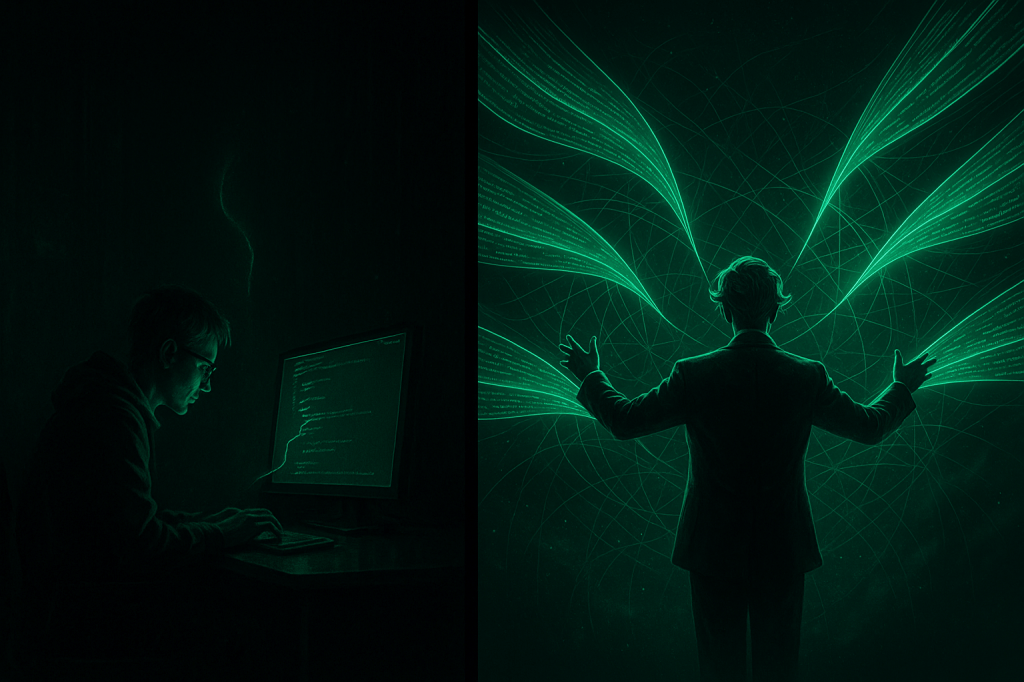

🧩 The Two Layers of Vibe Coding

Vibe coding introduces a new division of labor in software:

1️⃣ The Vibe Layer — Humans describe intent. “Build me a booking app with Stripe and Supabase.”

2️⃣ The Verification Layer — Engineers validate what’s built, test logic, ensure security, and deploy.

AI builds; humans supervise.

It’s no longer just writing code — it’s orchestrating it.
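To make the verification layer concrete, here is a minimal sketch in Python. It assumes the AI has produced a small helper for the booking app; the function name `slots_overlap` and its behavior are hypothetical, invented purely for illustration. The human-written checks are the part that pins down what "correct" means.

```python
from datetime import datetime

# Hypothetical AI-generated helper for a booking app (illustrative only).
def slots_overlap(start_a, end_a, start_b, end_b):
    """Return True if two half-open time ranges [start, end) overlap."""
    return start_a < end_b and start_b < end_a

# Human-written checks: the verification layer states the intended behavior.
def verify_slots_overlap():
    nine, ten, eleven = (datetime(2025, 1, 1, hour) for hour in (9, 10, 11))
    assert slots_overlap(nine, eleven, ten, eleven)    # overlapping bookings clash
    assert not slots_overlap(nine, ten, ten, eleven)   # back-to-back bookings are fine
    assert not slots_overlap(ten, eleven, nine, ten)   # argument order should not matter
    return True

verify_slots_overlap()
```

The generated function is treated as untrusted until the checks pass. Real verification would also cover security and deployment concerns, which this sketch leaves out.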

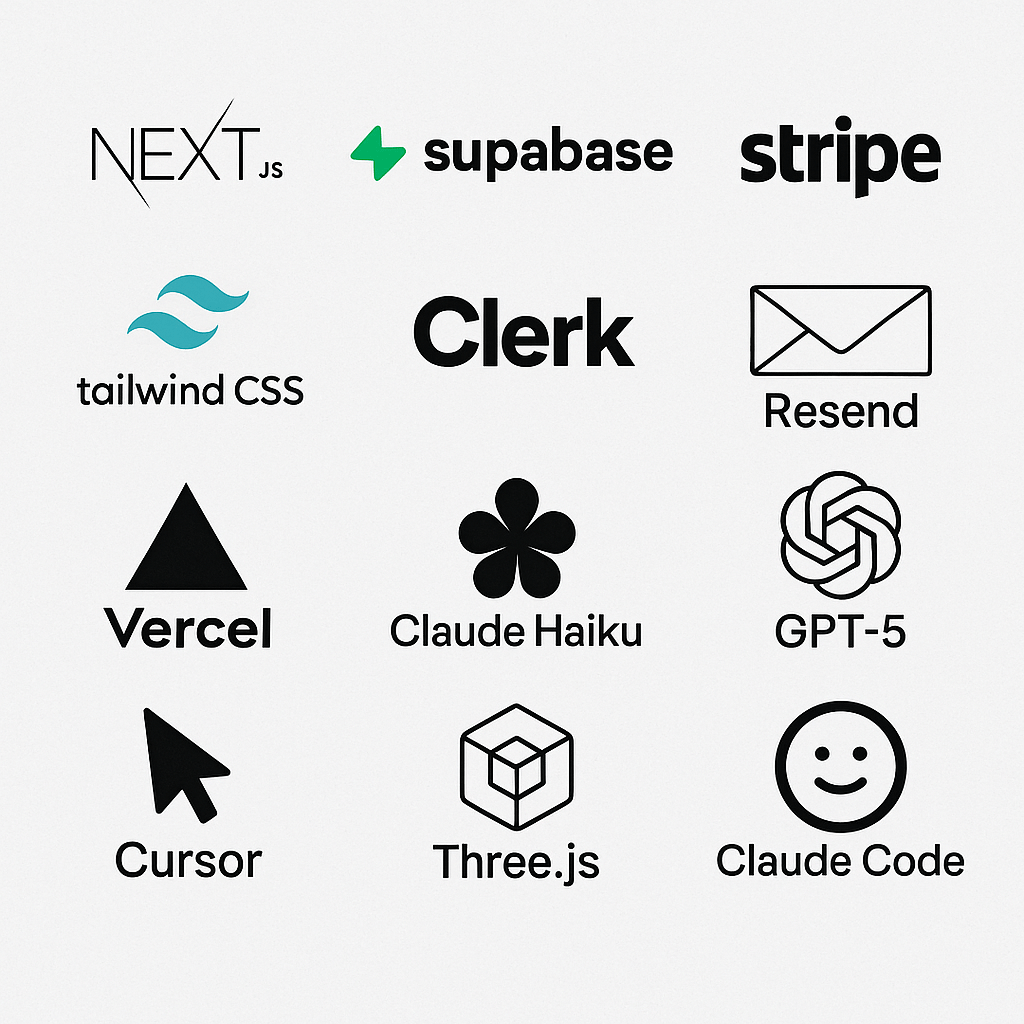

🧠 The Tool Stack of the Vibe Era

Mashable’s roundup highlights the most popular tools powering this new workflow — and they reflect how fast the ecosystem is maturing.

Claude Code (Anthropic)

Optimized for reasoning, multi-file edits, and safety. Ideal for step-by-step builds with real context.

Used by developers who want conversational debugging without chaos.

GPT-5 (OpenAI)

The powerhouse for “agentic coding,” where the model plans and builds a working app from a single prompt.

Beginners love it for speed; pros use it for scaffolding entire backends.

Cursor IDE

Think of it as a fork of VS Code with an AI copilot that reads your whole project for context.

You can import libraries, fix bugs, and chat directly with your codebase.

Lovable

The creative’s choice. Build stunning frontends through conversational design.

Ideal for marketers, designers, or founders who want something beautiful that just works.

v0 by Vercel

A UI-generation tool that turns natural language into deployable components — perfect for web apps and prototypes.

Often paired with Claude Code for “backend + frontend” synergy.

Opal (Google)

A beginner-friendly playground for vibe coding — visual, safe, and powered by Gemini, Imagen, and Veo.

21st.dev

A companion tool for building and exporting UI components that integrate seamlessly with your AI-generated app.

🌍 Why This Matters

Vibe coding doesn’t just make app creation faster — it changes who gets to build.

You no longer need to know Python or React to bring an idea to life.

You just need clarity of thought and creative direction.

That’s a profound shift.

Because when intent becomes the new interface, the line between founder and developer starts to blur.

🚀 The New Workflow

1️⃣ Describe your idea.

2️⃣ Let AI build the foundation.

3️⃣ Iterate through conversation.

4️⃣ Deploy with one click.

From there, you’re not just coding.

You’re conducting creation.
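The four steps above can be sketched as a simple loop. This is only an illustration under stated assumptions: `vibe_loop`, `fake_build`, and `fake_review` are hypothetical names, and the two stand-in functions replace a real AI tool and a real human reviewer so the example runs on its own.

```python
from typing import Callable, Optional

def vibe_loop(
    idea: str,
    build: Callable[[str], str],
    review: Callable[[str], Optional[str]],
    max_rounds: int = 5,
) -> str:
    """Describe, build, then iterate until the reviewer has no more feedback."""
    prompt = idea                    # 1. describe your idea
    draft = build(prompt)            # 2. let the AI build the foundation
    for _ in range(max_rounds):      # 3. iterate through conversation
        feedback = review(draft)
        if feedback is None:         # the reviewer is satisfied
            break
        prompt = f"{prompt}\nChange request: {feedback}"
        draft = build(prompt)
    return draft                     # 4. hand the result to a deploy step

# Stand-ins (assumptions) so the sketch runs without any AI service.
def fake_build(prompt: str) -> str:
    return f"draft v{prompt.count('Change request:') + 1}"

def fake_review(draft: str) -> Optional[str]:
    return "add a dark mode" if draft == "draft v1" else None

result = vibe_loop("a booking app", fake_build, fake_review)
print(result)
```

The `max_rounds` cap is a design choice: without it, a reviewer who is never satisfied would keep the loop running forever.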

🔮 The Future of Software Creation

In the old world, code was the bottleneck.

In the new world, imagination is the constraint.

Vibe coding is still messy — but it’s a glimpse of what’s coming:

software that feels more like storytelling than engineering.

The next generation won’t say, “I built an app.”

They’ll say, “I described one.”

And that might just be the biggest paradigm shift in tech since the browser.

🧭 Final Thought

Vibe coding won’t replace developers.

It will amplify them — and invite everyone else to join the creative process.

Put simply:

Vibe coding is what happens when creativity stops waiting for permission.